Running the Sigma collector on-premise

Note

The latest version of the Collector is 2.316. To view the release notes for this version and all previous versions, please go here.

Generating the command or YAML file

This section walks you through generating the command or YAML file for running the collector on Windows, Linux, or macOS.

To generate the command or YAML file:

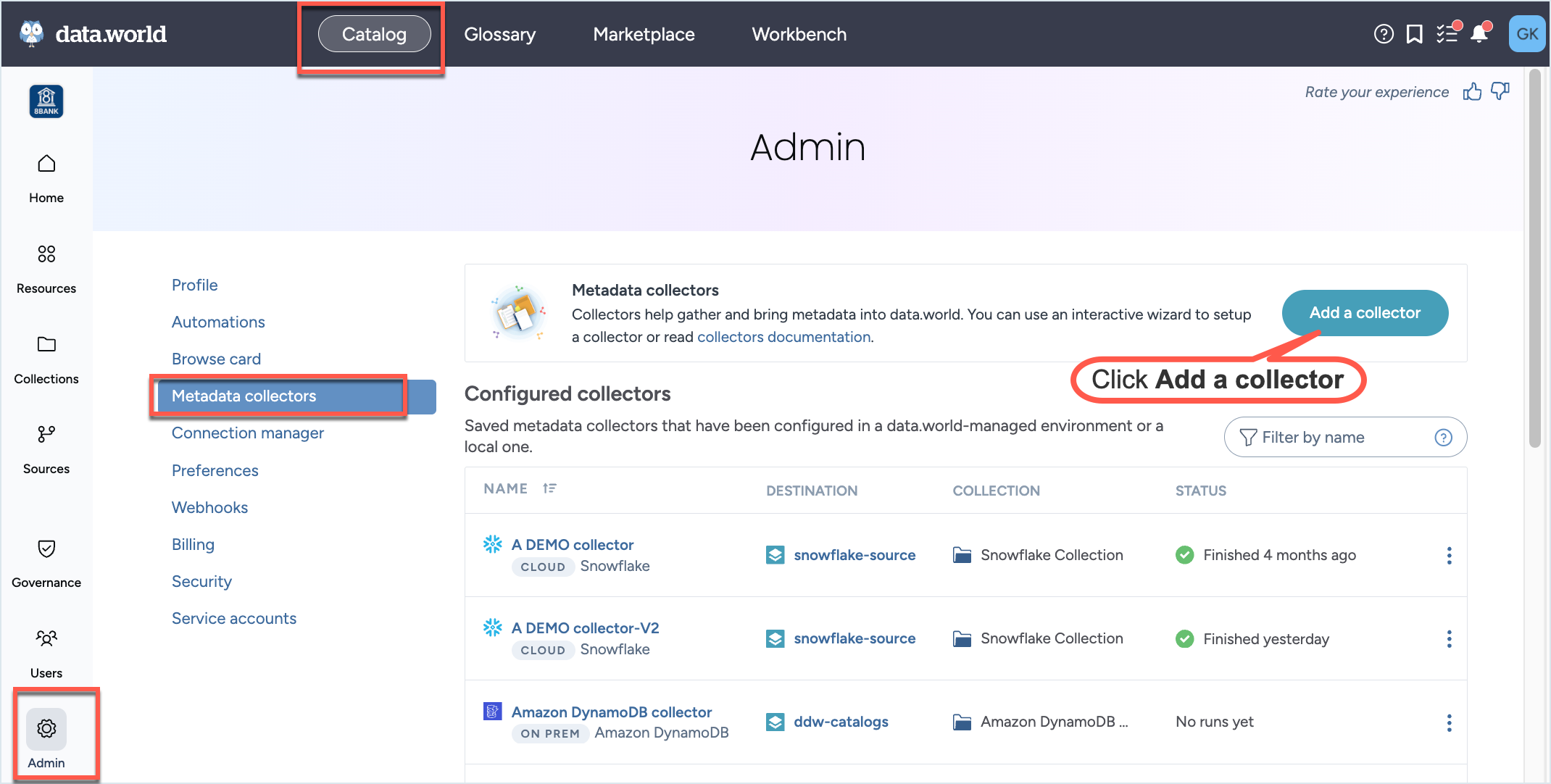

In the Catalog experience, go to the Admin page > Metadata collectors section.

Click the Add a collector button.

On the Choose metadata collector screen, select the correct metadata source. Click Next.

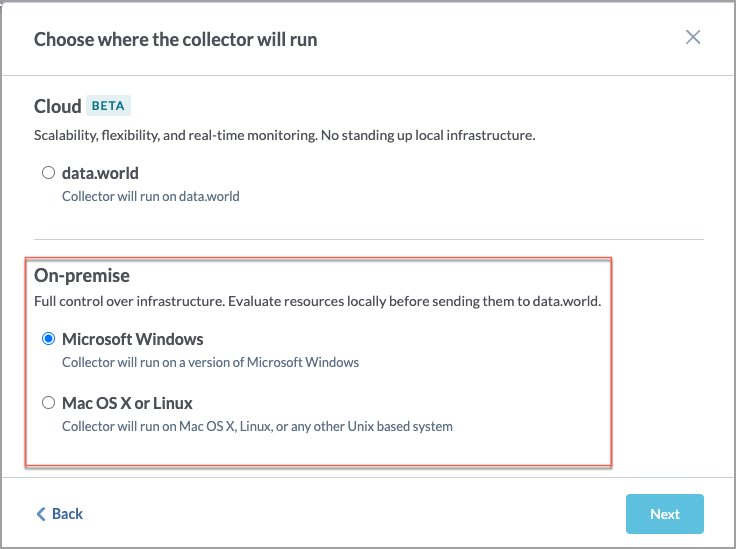

On the Choose where the collector will run screen, in the On-premise section, select whether you will run the collector on Windows, Mac OS, or Linux. This determines the format of the generated YAML file and CLI command. Click Next.

On the On-prem collector setup prerequisites screen, read the prerequisites and click Next.

On the Configure an on-premises Sigma Collector screen, set the following properties and click Next.

On the next screen, set the following properties and click Next.

Table 2.

| Field name | Corresponding parameter name | Details | Required? |
|---|---|---|---|
| Sigma API Token | `--sigma-api-token=<apiToken>` | The Sigma API Token associated with the user account. | Yes |
| Sigma Client ID | `--sigma-clientid=<clientId>` | The Sigma Client ID associated with the user account. | Yes |
| Include Sigma Workbook(s) | `--workbook-include=<includedWorkbookIds>` | Provide workbook IDs. The ID is available in the workbook URL. For example, if the workbook URL has the form `https://app.sigmacomputing.com/<accountname>/workbook/<hyphenated-workbook-name>-<workbookId>`, copy the workbook ID exactly as it appears in the URL. This includes the specified Sigma workbook's contents in the catalog. Use the parameter multiple times for multiple workbooks. For example: `--workbook-include="workbook1Id" --workbook-include="workbook2Id"` | No |
| Exclude Sigma Workbook(s) | `--workbook-exclude=<excludedWorkbookIds>` | Provide workbook IDs. The ID is available in the workbook URL. For example, if the workbook URL has the form `https://app.sigmacomputing.com/<accountname>/workbook/<hyphenated-workbook-name>-<workbookId>`, copy the workbook ID exactly as it appears in the URL. This excludes the specified Sigma workbook's contents from the catalog. Use the parameter multiple times for multiple workbooks. For example: `--workbook-exclude="workbook1Id" --workbook-exclude="workbook2Id"` | No |
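The workbook ID can also be pulled out of a workbook URL with a small shell helper. This is a minimal sketch, not part of the collector: the function name `workbook_id_from_url` is hypothetical, and it assumes the ID is the text after the last hyphen of the final path segment, which matches the URL form described above only if the ID itself contains no hyphens.

```shell
# Hypothetical helper: print the workbook ID embedded in a Sigma workbook URL.
# Assumption: the ID is everything after the last hyphen of the last path segment.
workbook_id_from_url() {
  url=${1%%\?*}     # drop any query string
  url=${url%/}      # drop a trailing slash, if present
  slug=${url##*/}   # keep the last path segment: <hyphenated-workbook-name>-<workbookId>
  printf '%s\n' "${slug##*-}"
}
```

The result can then be passed to the collector, for example as `--workbook-include="$(workbook_id_from_url "$url")"`. Check the printed value against the URL before relying on it.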

On the next screen set the following advanced properties. Click Next.

Table 3.

| Field name | Corresponding parameter name | Details | Required? |
|---|---|---|---|
| Disable lineage collection | `--disable-lineage-collection` | Skip harvesting lineage metadata from Sigma. | No |
| Page size for Sigma API responses | `--pagination-limit` | Specify the page size for Sigma API responses. The maximum value you can set is 1000. If you do not specify a value, the default page size is 25. | No |
| Host endpoint where Sigma is hosted | `--sigma-api-hostname=<apiHostname>` | The endpoint of the cloud platform where Sigma is hosted. For example: for Amazon AWS, `https://aws-api.sigmacomputing.com`; for Azure, `https://api.us.azure.sigmacomputing.com`; for Google Cloud, `https://api.sigmacomputing.com`. | Yes (required if Cloud provider where Sigma is hosted is not set) |
| Cloud provider where Sigma is hosted | `--sigma-cloud-provider=<cloudProvider>` | The initials of the cloud platform where Sigma is hosted. Accepted values: `aws`, `azure`, or `gcp`. | Yes (required if Host endpoint where Sigma is hosted is not set) |
| Include Sigma Workspace(s) | `--workspace-include=<includedWorkspace>` | Provide workspace names. Specify the workspaces to be collected, using either a workspace name or a regular expression to match. Use the parameter multiple times for multiple workspaces. For example: `--workspace-include="workspaceA" --workspace-include="workspaceB"` | No |
| Exclude Sigma Workspace(s) | `--workspace-exclude=<excludedWorkspace>` | Provide workspace names. Specify the workspaces and contents to exclude from being cataloged, using either a workspace name or a regular expression to match. Use the parameter multiple times for multiple workspaces. For example: `--workspace-exclude="workspaceA" --workspace-exclude="workspaceB"` | No |
| Max retries | `--api-max-retries=<maxRetries>` | Specify the number of times to retry an API call that has failed. The default value is 5. | No |
| Retry delay | `--api-retry-delay=<retryDelay>` | Specify the time in seconds to wait between retries of an API call that has failed. By default, the collector retries at increasing intervals, starting at 2 seconds. | No |
| API HTTP header | `--api-http-header` | Specify name-value pairs that the collector will include as HTTP headers in any calls to the HTTP API used by the collector to harvest metadata. Use the option multiple times for multiple headers. Note: Use this option only after consulting the data.world Support team. | No |
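Two of the rules in the table are easy to get wrong when editing a command by hand: at least one of the cloud provider and the host endpoint must be set, and the page size cannot exceed 1000. The sketch below checks both before a run. It is an illustration only; `check_sigma_options` is a hypothetical helper, not a collector feature, and the lower bound of 1 for the page size is an assumption.

```shell
# Hypothetical pre-flight check, not part of the collector.
# $1: value for --sigma-cloud-provider (may be empty)
# $2: value for --sigma-api-hostname   (may be empty)
# $3: value for --pagination-limit     (may be empty; the default of 25 then applies)
check_sigma_options() {
  provider=$1
  api_host=$2
  limit=$3
  if [ -z "$provider" ] && [ -z "$api_host" ]; then
    echo "set --sigma-cloud-provider or --sigma-api-hostname" >&2
    return 1
  fi
  case $provider in
    ''|aws|azure|gcp) ;;
    *) echo "cloud provider must be aws, azure, or gcp" >&2; return 1 ;;
  esac
  case $limit in
    '') ;;
    *[!0-9]*) echo "pagination limit must be a whole number" >&2; return 1 ;;
    *)
      # Assumption: 1 is the smallest useful page size; 1000 is the documented maximum.
      if [ "$limit" -lt 1 ] || [ "$limit" -gt 1000 ]; then
        echo "pagination limit must be between 1 and 1000" >&2
        return 1
      fi
      ;;
  esac
  return 0
}
```

For example, `check_sigma_options aws "" 500` succeeds, while `check_sigma_options "" "" 25` fails because neither the provider nor the endpoint is given.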

On the next screen, provide the Collector configuration name and an optional Configuration description. This is the name used to save the configuration details. The configuration is saved and made available on the Metadata collectors summary page from where you can edit or delete the configuration at a later point. Click Save and Continue.

On the Finalize your collector configuration screen, you are notified about the environment variables and directories you need to set up for running the collector. Select whether you want to generate a Configuration file (YAML) or Command line arguments (CLI). Click Next.

Important

You must ensure that you have set up these environment variables and directories before you run the collector.

The next screen gives you the option to download the YAML configuration file or copy the CLI command.

If you selected Command line arguments (CLI), from the Choose how to run the collector dropdown, select Java command or Docker command and note down the generated command. Click Done.

If you selected Configuration file (YAML), download the generated YAML file. Click Next.

The final screen displays the command to use for running the collector with the YAML file. From the Choose how to run the collector dropdown, select Java command or Docker command, and note down the generated command.

You will notice that the YAML/CLI has the following additional parameters that are automatically set for you.

Warning

Except for the collector version, you should not change the values of any of the parameters listed here.

Table 4.

| Parameter name | Details | Required? |
|---|---|---|
| `-a=<agent>`, `--agent=<agent>`, `--account=<agent>` | The ID for the data.world account into which you will load this catalog. This is used to generate the namespace for any URIs generated. | Yes |
| `--site=<site>` | Set this parameter only for private instances. Do not set it for public instances and single-tenant installations. | Yes (required for private instance installations) |
| `-U`, `--upload` | Whether to upload the generated catalog to the organization account's catalogs dataset. | Yes |
| `--no-log-upload` | Do not upload the log of the collector run to a dataset in data.world. This is the same dataset where the collector output is uploaded. By default, log files are uploaded to this dataset. | Yes |
| `dwcc:<CollectorVersion>` | The version of the collector you want to use (for example, `datadotworld/dwcc:2.248`). | Yes |

Add the following additional parameter to test run the collector.

--dry-run: If specified, the collector does not actually harvest any metadata, but just checks the connection parameters provided by the user and reports success or failure at connecting.

We recommend enabling debug level logs when running the collector for the first time. This approach aids in swiftly troubleshooting any configuration and connection issues that might arise during collector runs. Add the following parameter to your collector command:

-e log_level=DEBUG: Enables debug level logging for collectors.
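As an illustration of where these two options might go, the sketch below prints a first-run variant of the sample Docker command used later in this document. It rests on assumptions: that `-e log_level=DEBUG` is passed as a Docker environment option before the image name, and that `--dry-run` is appended to the collector arguments. The function name is hypothetical, and all values (config ID, agent, dataset, client ID, version) are the sample values from this document, so generate your own command from the application UI.

```shell
# Sketch only: prints the command text; it does not run Docker.
# Assumption: -e log_level=DEBUG is a docker option (before the image name)
# and --dry-run is a collector argument (after the subcommand).
print_first_run_command() {
  cat <<'EOF'
docker run -it --rm --mount type=bind,source=${HOME}/dwcc,target=/dwcc-output \
  --mount type=bind,source=${HOME}/dwcc,target=/app/log \
  -e log_level=DEBUG \
  datadotworld/dwcc:2.185 \
  catalog-sigma --collector-metadata=config-id=48678358-e53e-43fa-8783-c468013dd07b \
  --agent=initech --api-token=${DW_AUTH_TOKEN} --upload=true --name="Sigma Collection" \
  --output=/dwcc-output --upload-location=ddw-sandbox --sigma-api-token=${DW_SIGMA_TOKEN} \
  --sigma-clientid=2322 --sigma-cloud-provider=aws \
  --dry-run
EOF
}
```

Once the dry run reports a successful connection, remove `--dry-run` to harvest metadata.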

Verifying environment variables and directories

Verify that you have set up all the required environment variables that were identified by the Collector Wizard before running the collector. Alternatively, you can set these credentials in a credential vault and use a script to retrieve those credentials.

Verify that you have set up all the required directories that were identified by the Collector Wizard.
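Both checks can be scripted so that a missing variable or directory is caught before the collector starts. The following is a minimal sketch with hypothetical helper names; the variables and directories to check are whatever the Collector Wizard listed for your configuration (the sample commands in this document use `DW_AUTH_TOKEN`, `DW_SIGMA_TOKEN`, and `${HOME}/dwcc`).

```shell
# Hypothetical pre-flight helpers, not part of the collector.

# Succeeds only if every named environment variable is set and non-empty.
# Pass trusted variable names only, because the names are expanded with eval.
require_env() {
  missing=0
  for name in "$@"; do
    eval "value=\${$name-}"
    if [ -z "$value" ]; then
      echo "environment variable $name is not set" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Succeeds only if every given path is an existing directory.
require_dirs() {
  missing=0
  for dir in "$@"; do
    if [ ! -d "$dir" ]; then
      echo "directory $dir does not exist" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

A wrapper script could call `require_env DW_AUTH_TOKEN DW_SIGMA_TOKEN && require_dirs "${HOME}/dwcc"` and run the collector only if both succeed.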

Running the collector

Important

Before you begin running the collector, make sure you have completed all the prerequisite tasks.

Running collector using YAML file

Go to the machine where you have set up Docker to run the collector.

Place the YAML file generated from the Collector wizard in the correct directory.

From the command line, run the command generated from the application for executing the YAML file. Here is a sample Docker command. Similarly, you can get the Java command from the UI.

Caution

Note that this is just a sample command for showing the syntax. You must generate the command specific to your setup from the application UI.

    docker run -it --rm --mount type=bind,source=${HOME}/dwcc,target=/dwcc-output \
      --mount type=bind,source=${HOME}/dwcc,target=/app/log -e DW_AUTH_TOKEN=${DW_AUTH_TOKEN} \
      -e DW_SIGMA_TOKEN=${DW_SIGMA_TOKEN} datadotworld/dwcc:2.185 --config-file=/dwcc-output/config-sigma.yml

The collector automatically uploads the file to the specified dataset, and you can also find the output at the location you specified while running the collector. Similarly, the log files are uploaded to the specified dataset and can be found in the directory mounted to target=/app/log specified in the command.

If you decide in the future that you want to run the collector using an updated version, simply modify the collector version in the provided command. This will allow you to run the collector with the latest version.
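If the generated command is kept in a script or a text file, the version change can be applied with a one-line substitution. This is a minimal sketch under that assumption; `set_collector_version` is a hypothetical helper, and it only rewrites the `datadotworld/dwcc:<version>` image tag seen in the sample command.

```shell
# Hypothetical helper: print a saved collector command with the image tag replaced.
# $1: the saved command text
# $2: the collector version to use instead
set_collector_version() {
  printf '%s\n' "$1" | sed "s#datadotworld/dwcc:[0-9.]*#datadotworld/dwcc:$2#"
}
```

For example, `set_collector_version "$saved_command" 2.316` prints the same command with `datadotworld/dwcc:2.316` as the image. Leave the other generated parameters unchanged.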

Running collector without the YAML file

Go to the machine where you have set up Docker to run the collector.

From the command line, run the command generated from the application. Here is a sample Docker command. Similarly, you can get the Java command from the UI.

Caution

Note that this is just a sample command for showing the syntax. You must generate the command specific to your setup from the application UI.

    docker run -it --rm --mount type=bind,source=${HOME}/dwcc,target=/dwcc-output \
      --mount type=bind,source=${HOME}/dwcc,target=/app/log datadotworld/dwcc:2.185 \
      catalog-sigma --collector-metadata=config-id=48678358-e53e-43fa-8783-c468013dd07b \
      --agent=initech --api-token=${DW_AUTH_TOKEN} --upload=true --name="Sigma Collection" \
      --output=/dwcc-output --upload-location=ddw-sandbox --sigma-api-token=${DW_SIGMA_TOKEN} \
      --sigma-clientid=2322 --sigma-cloud-provider=aws

The collector automatically uploads the file to the specified dataset, and you can also find the output at the location you specified while running the collector. Similarly, the log files are uploaded to the specified dataset and can be found in the directory mounted to target=/app/log specified in the command.

If you decide in the future that you want to run the collector using an updated version, simply modify the collector version in the provided command. This will allow you to run the collector with the latest version.

Automating updates to your metadata catalog

Maintaining an up-to-date metadata catalog is crucial and can be achieved by employing Azure Pipelines, CircleCI, or any automation tool of your preference to execute the catalog collector regularly.

There are two primary strategies for setting up the collector run times:

Scheduled: You can schedule the collector (for instance, daily or weekly) according to the anticipated frequency of metadata changes in your data source and the business need to access updated metadata. Account for the completion time of the collector run (which depends on the size of the source) and the time required to load the collector's output into your catalog. We recommend scheduling the collector run during off-peak times for optimal performance.

Event-triggered: If you have set up automations that refresh the data in a source technology, you can set up the collector to execute whenever the upstream jobs complete successfully. For example, if you're using Airflow, GitHub Actions, dbt, etc., you can configure the collector to run automatically and keep your catalog updated following modifications to your data sources.
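The event-triggered strategy can be sketched as a small shell wrapper that runs the collector only when the upstream job reported success. This is an illustration, not a data.world feature: the function name is hypothetical, and the collector command passed to it is whatever command you generated from the application UI.

```shell
# Hypothetical wrapper for an event-triggered run, not part of the collector.
# $1: exit status of the upstream job
# remaining arguments: the collector command to run
run_collector_if_ok() {
  upstream_status=$1
  shift
  if [ "$upstream_status" -ne 0 ]; then
    echo "upstream job failed (status $upstream_status); skipping collector run" >&2
    return 1
  fi
  "$@"
}
```

In a pipeline step, capture the upstream job's exit status and pass it as the first argument, followed by the collector command. For the scheduled strategy, the same collector command can instead be invoked by a scheduler such as cron or by your automation tool at the chosen interval.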

Managing collector runs and configuration details

From the Metadata collectors summary page, view the collector runs to ensure they are running successfully.

From the same Metadata collectors summary page you can view, edit, or delete the configuration details for the collectors.